Hacking and Securing Machine Learning Systems and Environments

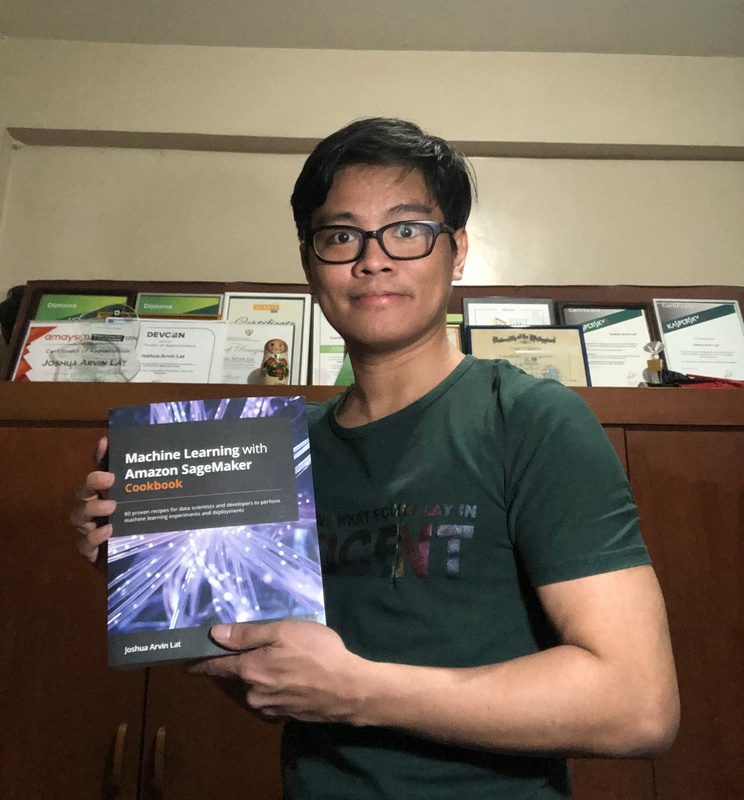

Joshua Arvin Lat is the Chief Technology Officer (CTO) of NuWorks Interactive Labs, Inc. He previously served as the CTO of three Australian-owned companies and as the Director for Software Development and Engineering at multiple e-commerce startups, experience that helped him become a more effective leader. Years ago, he and his team won first place in a global cybersecurity competition with their published research paper. He is also an AWS Machine Learning Hero and has shared his knowledge at several international conferences, discussing practical strategies for machine learning, engineering, security, and management. He is the author of the book "Machine Learning with Amazon SageMaker Cookbook".

Abstract

Designing and building machine learning systems is not an easy task. ML practitioners deploy ML models by converting some of their Jupyter Notebook Python code into production-ready application code. Once these ML systems have been set up, they need to be secured properly to manage vulnerabilities and exploits. There are different ways to attack ML systems, and most data science teams are not equipped with the skills required to secure them. In this session, we will discuss in detail several strategies and solutions for securing these systems. We will review several attacks crafted to take advantage of vulnerabilities in Python libraries such as Joblib, urllib, and PyYAML. In addition, we will look at possible attacks on ML inference endpoints built using frameworks such as Flask, Pyramid, or Django. Finally, we will walk through several examples of how ML environments that use ML frameworks (such as TensorFlow and PyTorch) can be attacked and compromised.
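The abstract does not reproduce the talk's examples, but the class of attack it names for Joblib can be sketched with the standard library alone: Joblib's persistence format is built on Python's `pickle`, and unpickling can invoke any importable function. The sketch below is illustrative only and is not the speaker's material. `MaliciousModel`, `SAFE_GLOBALS`, and `restricted_loads` are hypothetical names, and `os.getcwd` is a harmless stand-in for a damaging call such as `os.system`. The mitigation follows the "restricting globals" pattern (overriding `Unpickler.find_class`) described in Python's `pickle` documentation.

```python
import io
import os
import pickle


# Hypothetical attacker-controlled object: __reduce__ tells pickle which
# callable to invoke (and with which arguments) when the bytes are loaded.
# os.getcwd is a harmless stand-in; a real payload could name os.system.
class MaliciousModel:
    def __reduce__(self):
        return (os.getcwd, ())


# Hypothetical allowlist of (module, name) globals the loader may resolve.
SAFE_GLOBALS = {
    ("builtins", "dict"),
    ("builtins", "list"),
    ("builtins", "set"),
    ("builtins", "frozenset"),
}


class RestrictedUnpickler(pickle.Unpickler):
    """Refuse to resolve any global that is not explicitly allowlisted."""

    def find_class(self, module, name):
        if (module, name) in SAFE_GLOBALS:
            return super().find_class(module, name)
        raise pickle.UnpicklingError(f"global '{module}.{name}' is forbidden")


def restricted_loads(data: bytes):
    """Deserialize bytes while enforcing the allowlist."""
    return RestrictedUnpickler(io.BytesIO(data)).load()


if __name__ == "__main__":
    payload = pickle.dumps(MaliciousModel())

    # Plain pickle.loads resolves os.getcwd and calls it during loading.
    print("unsafe load returned:", pickle.loads(payload))

    # The restricted loader rejects the global before the call can happen.
    try:
        restricted_loads(payload)
    except pickle.UnpicklingError as exc:
        print("restricted load blocked:", exc)

    # Benign data built from plain containers and floats still round-trips.
    weights = {"layer1": [0.1, 0.2], "bias": 0.5}
    print(restricted_loads(pickle.dumps(weights)))
```

An allowlist like this is defense in depth rather than a guarantee; the safer course is to avoid loading pickle or Joblib files from untrusted sources at all. For PyYAML, the analogous habit is to use `yaml.safe_load`, which only constructs standard YAML types, instead of loading untrusted input with a loader that can construct arbitrary Python objects.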

Location

R1

Date

Day 1 • 10:45-11:15 (GMT+8)

Language

English talk

Level

Experienced

Category

Security